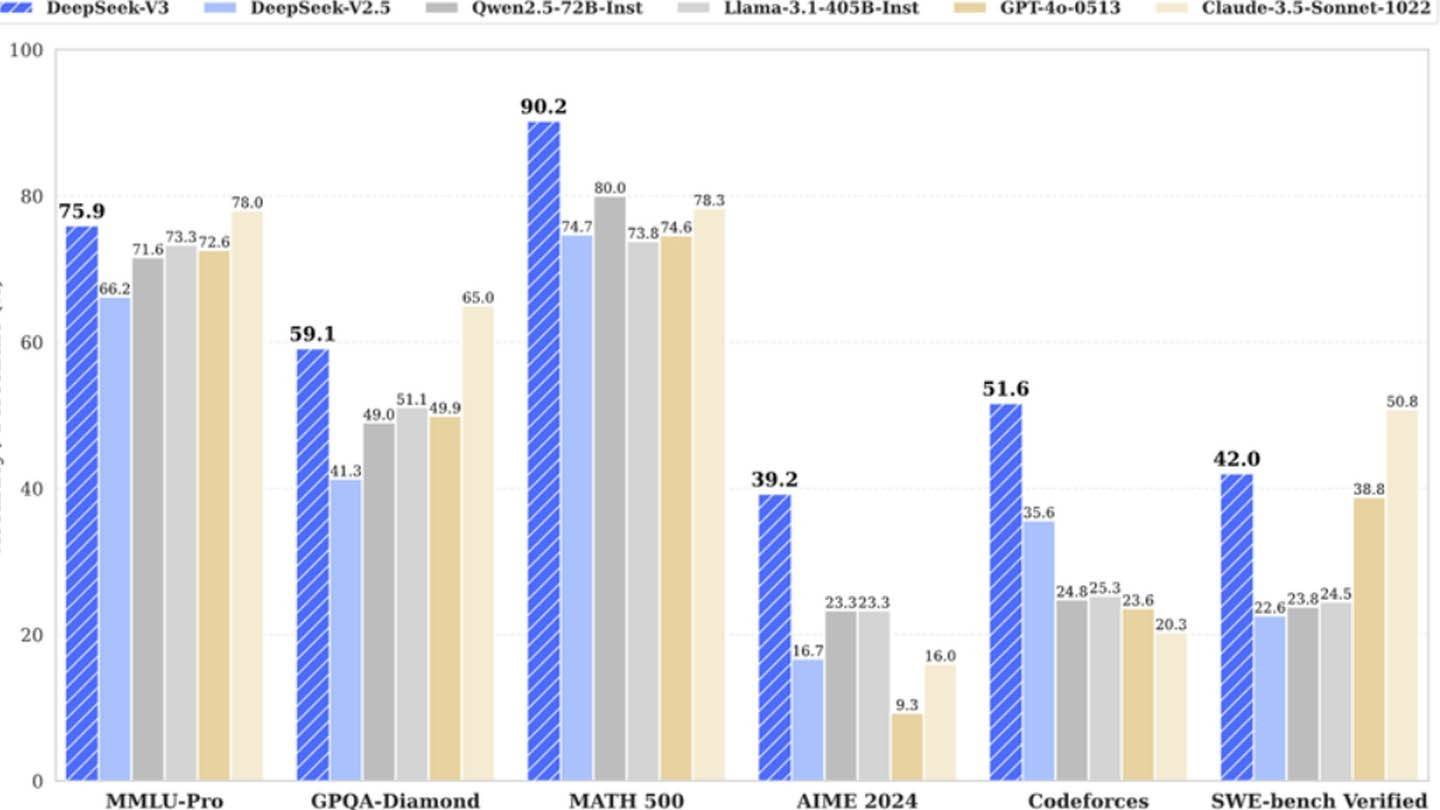

DeepSeek's new chatbot is surprisingly capable. It is challenging industry giants and has rippled through the market, including a notable drop in NVIDIA's stock price. Its self-introduction, "Hi, I was created so you can ask anything and get an answer that might even surprise you," captures that competitive edge. The success stems from a combination of innovative architecture and training methods.

Image: ensigame.com

Key technological advancements include Multi-token Prediction (MTP), which predicts several upcoming tokens at once rather than only the next one, improving accuracy and efficiency; Mixture of Experts (MoE), which in DeepSeek V3 uses 256 expert neural networks but activates only eight of them per token, speeding up training and improving performance; and Multi-head Latent Attention (MLA), which compresses the attention keys and values into a compact latent representation so the model keeps the crucial information while using far less memory.
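To make the "256 experts, eight active per token" idea concrete, here is a minimal, simplified sketch of top-k expert routing in plain Python. It is a generic softmax top-k router, not DeepSeek's actual implementation: the toy hidden size, the single-matrix "experts", and the helper names (`route`, `moe_forward`, etc.) are hypothetical, and details such as shared experts and load balancing are omitted.

```python
import math
import random

NUM_EXPERTS = 256  # routed experts, matching the count described for DeepSeek V3
TOP_K = 8          # experts activated per token
HIDDEN = 16        # toy hidden size (hypothetical; real models are far larger)

def softmax(xs):
    # Numerically stable softmax over a short list of scores
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def make_expert(rng):
    # Hypothetical toy expert: a single HIDDEN x HIDDEN linear map
    return [[rng.gauss(0, 0.1) for _ in range(HIDDEN)] for _ in range(HIDDEN)]

def apply_expert(weights, x):
    # Matrix-vector product: one output value per weight row
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def route(router, x, k=TOP_K):
    # Score every expert for this token, keep the k highest-scoring ones
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Gate weights are normalized over the selected experts only
    gates = softmax([scores[i] for i in top])
    return top, gates

def moe_forward(x, router, experts, k=TOP_K):
    # Only the k selected experts run; the other experts are skipped entirely
    top, gates = route(router, x, k)
    out = [0.0] * HIDDEN
    for idx, g in zip(top, gates):
        y = apply_expert(experts[idx], x)
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top

rng = random.Random(0)
experts = [make_expert(rng) for _ in range(NUM_EXPERTS)]
router = [[rng.gauss(0, 1) for _ in range(HIDDEN)] for _ in range(NUM_EXPERTS)]
token = [rng.gauss(0, 1) for _ in range(HIDDEN)]
out, used = moe_forward(token, router, experts)
print(len(used), "of", NUM_EXPERTS, "experts ran")  # prints "8 of 256 experts ran"
```

The efficiency gain comes from the last step: although the model holds 256 experts' worth of parameters, each token pays the compute cost of only eight of them.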

DeepSeek initially claimed a remarkably low training cost of $6 million for DeepSeek V3, trained on 2,048 H800 GPUs. SemiAnalysis, however, reported a far larger infrastructure: approximately 50,000 Nvidia Hopper GPUs (including 10,000 H800s, 10,000 H100s, and additional H20s) spread across multiple data centers. That translates to a server investment of roughly $1.6 billion and operating expenses of around $944 million.

DeepSeek is a subsidiary of the Chinese hedge fund High-Flyer. It owns its data centers, which gives it control over its infrastructure and lets it innovate quickly, and its self-funded status keeps decision-making fast. The company attracts top talent, recruited primarily from Chinese universities, with some researchers earning over $1.3 million a year. The initial $6 million figure likely reflects only the GPU cost of pre-training, excluding research, refinement, data processing, and overall infrastructure. DeepSeek's actual investment in AI development exceeds $500 million. Even so, its compact structure lets it innovate more efficiently than larger, more bureaucratic competitors.
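A quick back-of-the-envelope calculation shows why the headline number covers so little. The GPU-hour breakdown and the $2-per-hour H800 rental rate below come from the DeepSeek-V3 technical report, not from this article; the $1.6 billion figure is the SemiAnalysis server estimate cited above. Treat it as a rough sketch that compares a rental-price estimate with an ownership estimate.

```python
# GPU hours for the final training run, as listed in the DeepSeek-V3 technical report
GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
PRICE_PER_GPU_HOUR = 2.00   # USD; the report's assumed H800 rental price
SERVER_CAPEX = 1.6e9        # USD; SemiAnalysis estimate quoted in this article

total_hours = sum(GPU_HOURS.values())
total_cost = total_hours * PRICE_PER_GPU_HOUR
print(f"{total_hours:,} GPU hours -> ${total_cost / 1e6:.3f}M")  # 2,788,000 GPU hours -> $5.576M
print(f"Server capex is ~{SERVER_CAPEX / total_cost:.0f}x the headline training cost")
```

The roughly $5.6 million result (rounded to "$6 million" in coverage) prices only rented GPU time for one final run, which is why it sits orders of magnitude below the hardware estimate.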

DeepSeek's success highlights how well-funded, independent AI companies can compete effectively. While its "revolutionary budget" claims are arguably exaggerated, its achievements are undeniably impressive, particularly against competitors' far higher costs. For example, DeepSeek's R1 model reportedly cost $5 million to train, while GPT-4o cost $100 million. Ultimately, the company's rise reflects substantial investment, technological breakthroughs, and a highly skilled team.